Last week, I wrote about a Meta ads test that I ran to compare traffic quality results based on three different optimizations. While I was confident in the results, some people questioned whether they were misleading because I used all placements.

I saw it as an opportunity to run another test. And after this one, I plan to run a third.

The short summary: The second test validates the results of the first. But it was still useful to run and see what would happen.

So let’s discuss what I did and found out…

Overview of Test #1

Before we get to the second test, a quick refresher is in order. For the first test, I created a campaign that compared the results generated by three different ad sets, each optimized differently:

- Link Clicks

- Landing Page Views

- Quality Visitor Custom Event (I’ve written separately about how I created this event)

The targeting for each was completely broad, using only countries (US, UK, Canada, and Australia). I was promoting a blog post, and the goal was to drive the most Quality Visitors.

The Quality Visitor custom event fires when someone spends at least 2 minutes and scrolls 70% or more on a page. Because I was promoting a blog post, I wasn’t looking for purchases or leads, but it was important that the ads drove engaged readers.
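The trigger rule is simple enough to express as a small state check. Below is a minimal sketch of that logic in Python. It assumes the page reports elapsed time and scroll depth as the reader engages, and the class and method names are hypothetical. In a real setup this check would run in the browser and send the event through the Meta Pixel (for example, with `fbq('trackCustom', ...)`) instead of returning a value.

```python
from dataclasses import dataclass


@dataclass
class QualityVisitorTracker:
    """Hypothetical tracker for the Quality Visitor rule:
    at least 2 minutes on the page AND at least 70% scroll depth."""

    min_seconds: float = 120.0    # 2 minutes
    min_scroll_pct: float = 70.0  # 70% of the page
    max_scroll_pct: float = 0.0   # deepest scroll seen so far
    fired: bool = False           # fire at most once per page view

    def update(self, elapsed_seconds: float, scroll_pct: float) -> bool:
        """Record the latest reading; return True only on the update
        where the event should fire."""
        # Keep the deepest point reached, so scrolling back up doesn't reset it.
        self.max_scroll_pct = max(self.max_scroll_pct, scroll_pct)
        if self.fired:
            return False
        if elapsed_seconds >= self.min_seconds and self.max_scroll_pct >= self.min_scroll_pct:
            self.fired = True
            return True
        return False


tracker = QualityVisitorTracker()
print(tracker.update(30, 75))   # False: scrolled far enough, but too soon
print(tracker.update(125, 40))  # True: 2+ minutes, and 75% was already reached
print(tracker.update(200, 90))  # False: already fired for this page view
```

Tracking the deepest scroll rather than the current position means a reader who scrolls down and then back up still counts. The post doesn’t say whether the actual event behaves that way, so treat that detail as an assumption.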

The test ran in two parts: one with delivery controlled by an A/B split test and one without. While the A/B split test was more expensive overall, the results were consistent across both.

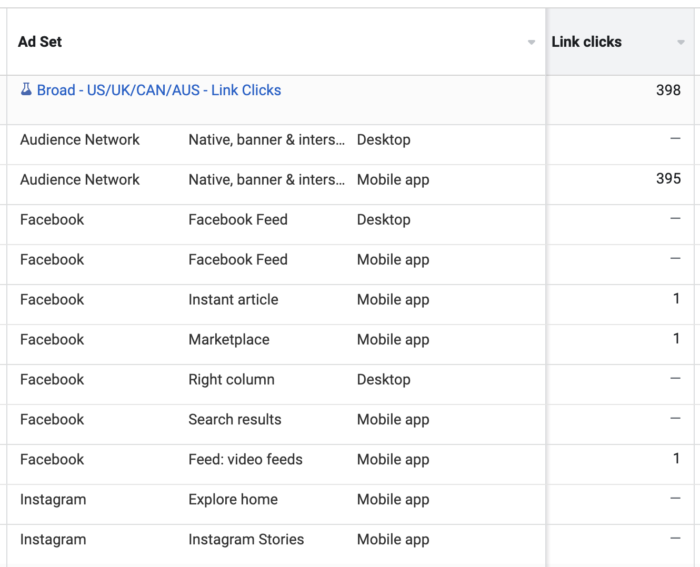

As you can see in the results above, optimizing for Link Clicks or Landing Page Views led to empty clicks. Very few of those clicks resulted in people spending two minutes and scrolling 70%. Of course, optimizing for that Quality Visitor event drove far more of those actions. It wasn’t close.

While it wasn’t the goal, I should note that only the Quality Visitor ad set drove newsletter registrations (it resulted in 5).

Not surprisingly, most of the clicks from the Link Click and Landing Page View ad sets came through the Audience Network placement, which can be problematic.

Some suggested that the results might have been different if I had focused only on “high-performing” placements.

That led to Test #2.

Test #2 Setup

I should mention that I think removing Audience Network kind of misses the point. The fact that most of the clicks go through Audience Network when optimizing for Link Clicks and Landing Page Views is proof, in my mind, that only the click matters with these optimizations. Any quality action is incidental.

Even if you focus entirely on “high-quality” placements, the algorithm still approaches delivery the same way when optimizing for Link Clicks and Landing Page Views. The focus is on cheap clicks and nothing else. While the placement may produce more quality visitors, the algorithm won’t care.

But let’s see if that theory pans out…

I set up the second campaign mostly the same way as the first. The first difference is that I didn’t bother with the A/B test this time. All it did was raise the price for each ad set.

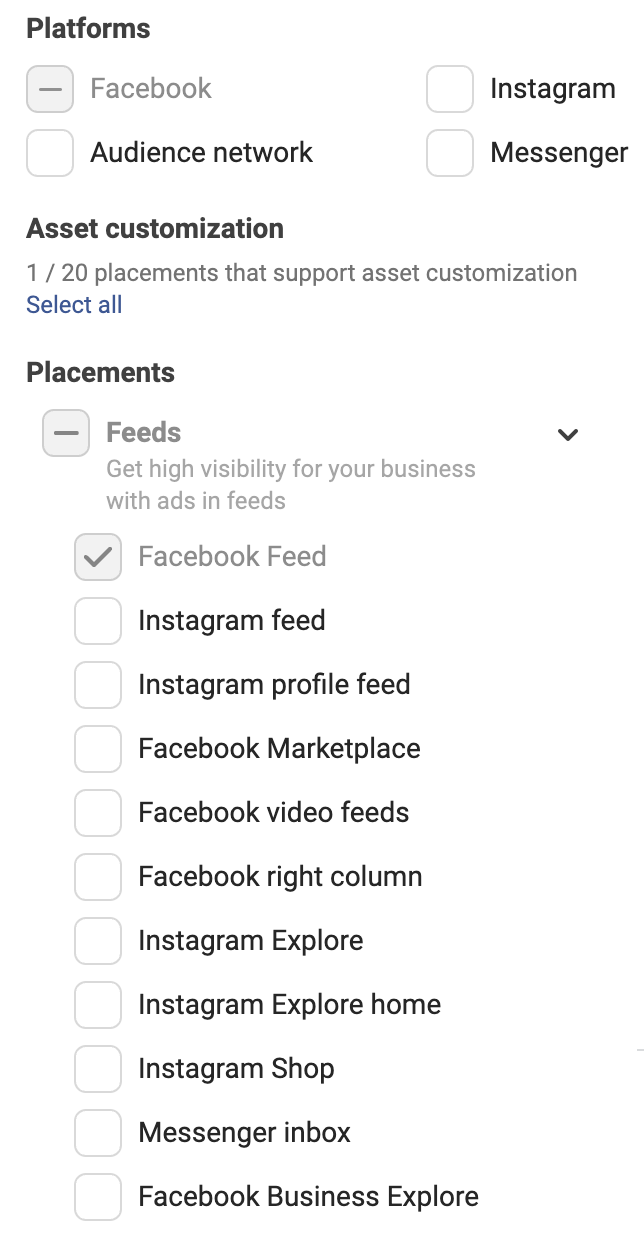

Of course, the other difference is related to placement. This time, I would only use News Feed.

Everything else would remain the same:

- One ad set optimized for Link Clicks, one for Landing Page Views, one for Quality Visitor Custom Event

- No custom audiences, lookalike audiences, or detailed targeting

- The only targeting is by country (US, UK, Canada, Australia)

- Exclude people who already read the blog post

- Only News Feed

I would spend about $100 per ad set. While that’s a small sample, it’s still enough to generate meaningful results when you can get a decent volume of clicks.

Test #2 Results

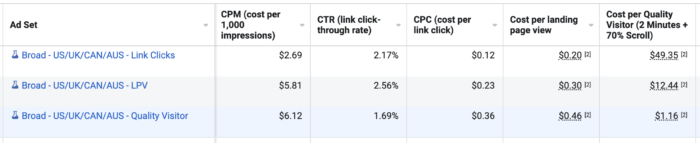

Here are the results from the second test.

A few observations…

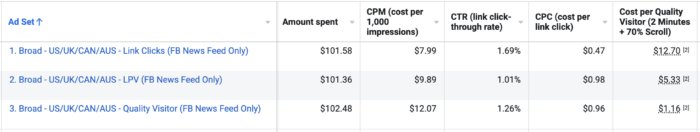

1. CPM is at least 2X higher across the board. This is not surprising since I was forcing the algorithm to only use News Feed, which is the most expensive placement.

2. CTR calmed down quite a bit. Again, that’s not surprising when you toss out Audience Network. One interesting difference is that the CTR for the Quality Visitors optimization was actually better than for the Landing Page Views optimization. I doubt this is meaningful, but it’s still interesting.

3. The Cost Per Link Click went up 3-4X. One crazy thing is that CPC was the same for the Landing Page View and Quality Visitor optimizations.

4. Cost Per Quality Visitor was clearly best for the Quality Visitor optimization. I’m not surprised by this, but it’s still good to get confirmation. The costs when optimizing for Link Clicks and Landing Page Views did come down, but it’s still not close.
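For reference, the metrics in these observations are simple ratios of spend and counts. The sketch below computes them in Python. The class name is hypothetical, and the input numbers in the usage line are made up for illustration; they are not the results from either test.

```python
from dataclasses import dataclass


@dataclass
class AdSetStats:
    spend: float           # total amount spent, in dollars
    impressions: int
    link_clicks: int
    quality_visitors: int  # count of Quality Visitor custom events

    @property
    def cpm(self) -> float:
        """Cost per 1,000 impressions."""
        return self.spend / self.impressions * 1000

    @property
    def ctr(self) -> float:
        """Link click-through rate, as a percentage of impressions."""
        return self.link_clicks / self.impressions * 100

    @property
    def cpc(self) -> float:
        """Cost per link click."""
        return self.spend / self.link_clicks

    @property
    def cost_per_quality_visitor(self) -> float:
        """Cost per Quality Visitor event; infinite if none were recorded."""
        if self.quality_visitors == 0:
            return float("inf")
        return self.spend / self.quality_visitors


# Hypothetical numbers, not taken from the test.
example = AdSetStats(spend=100.0, impressions=10_000, link_clicks=200, quality_visitors=20)
print(f"CPM ${example.cpm:.2f}, CTR {example.ctr:.1f}%, "
      f"CPC ${example.cpc:.2f}, cost per QV ${example.cost_per_quality_visitor:.2f}")
```

Because every metric divides the same spend, a cheap CPC doesn’t guarantee a cheap cost per Quality Visitor: if few of those clicks produce the event, the denominator shrinks and that cost climbs.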

Also, a side note on incidental newsletter registrations. While purchases and leads weren’t the goals, the assumption is that a quality visitor is more likely to subscribe to my newsletter. As I mentioned earlier, that happened 5 times when optimizing for Quality Visitors in the first test, while it never happened when optimizing for Link Clicks or Landing Page Views.

In this second test, there were only two newsletter registrations, but both again came from the ad set optimized for Quality Visitors.

1-Day Click

Something I also wanted to look at was 1-day click attribution results. The reason is that it’s entirely possible that these results are inflated by incidental remarketing to people who would be coming to my website anyway.

Since the Quality Visitor metric isn’t unique (one person can trigger it multiple times), I also wanted to eliminate people coming back multiple times during the next 7 days. So, let’s focus only on 1-day click: people who clicked the ad and performed this action within a day.

As you can see, the costs do go up some, but they actually go up for all three ad sets. The results remain clearly the best when optimizing for Quality Visitors. This is the best way to actually get Quality Visitors.

I’m not surprised by that. You shouldn’t be either. But it’s nice to see the confirmation.
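To make the difference between attribution windows concrete, here is a minimal Python sketch of how a 1-day click window counts events compared with a 7-day click window. It assumes a hypothetical record of each person’s ad click time and their Quality Visitor event times. This is a simplification for illustration, not how Meta computes attribution internally.

```python
from datetime import datetime, timedelta


def attributed_events(clicks, events, window_days, unique=False):
    """Count events that happened within `window_days` after a person's ad click.

    clicks: dict mapping a hypothetical person ID to their ad click time
    events: list of (person_id, event_time) tuples for Quality Visitor events
    unique: if True, count at most one event per person
    """
    window = timedelta(days=window_days)
    counted = 0
    seen = set()
    for person, event_time in events:
        click_time = clicks.get(person)
        if click_time is None:
            continue  # no ad click recorded for this person
        if not (click_time <= event_time <= click_time + window):
            continue  # event fell outside the attribution window
        if unique and person in seen:
            continue  # repeat visit by someone already counted
        seen.add(person)
        counted += 1
    return counted


t0 = datetime(2024, 1, 1, 9, 0)
clicks = {"a": t0, "b": t0}
events = [
    ("a", t0 + timedelta(minutes=5)),  # same session as the click
    ("a", t0 + timedelta(days=3)),     # came back three days later
    ("b", t0 + timedelta(days=6)),     # first qualifying visit after six days
]
print(attributed_events(clicks, events, window_days=7))               # 3
print(attributed_events(clicks, events, window_days=1))               # 1
print(attributed_events(clicks, events, window_days=7, unique=True))  # 2
```

Shrinking the window removes the later return visits. The trade-off is that it also drops genuine conversions that happen after the first day.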

New Questions

While I feel really good about optimizing for Quality Visitors to drive highly engaged readers, I can’t help but ask a couple more questions that I want answered.

1. How much of this is remarketing? Even though the targeting was broad, it’s entirely possible that the algorithm starts with my website visitors since they are most likely to perform this action.

2. Will results be impacted by using a 1-Day Click Attribution Setting? I wasn’t able to use a 1-Day Click Attribution Setting in the first tests because the Link Clicks and Landing Page Views optimizations don’t allow that change. But if I’m only running ad sets optimized for conversions (Quality Visitors), this will be possible.

The Next Test

So now, let’s run a new test based on these questions. I think it’s safe to throw out optimizations for Link Clicks and Landing Page Views. Those options clearly will not result in driving quality traffic.

This time, I want to test two different ad sets, both optimized for a Quality Visitor using a 1-Day Click Attribution Setting. This is something that could backfire, though. If I’m not getting results with the 1-Day Click Attribution Setting within a couple of days, I’ll reassess and may look to start over.

The difference in the two ad sets will be the targeting:

- Completely Broad in the US, UK, Canada, and Australia

- Completely Broad in the US, UK, Canada, and Australia, but EXCLUDING All Website Visitors (180 Days)

By excluding my website visitors in the second ad set, even those who haven’t fired the Quality Visitor event before, we’re going to get a much better sense of whom the algorithm is targeting with this type of optimization. There are a couple of potential scenarios:

1. The ad set that excludes my website visitors completely bombs. This would be evidence that even though I used broad targeting, it was mostly remarketing to my current audience.

2. The ad set that excludes my website visitors doesn’t bomb. If this is the case, it’s evidence that at least some (maybe more) of the Quality Visitors are from a cold audience, which would be pretty fascinating.
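One way to put a rough number on the remarketing question outside of Ads Manager is to compare the people who became Quality Visitors against your own record of earlier site visits. The sketch below assumes you have first-party visit logs keyed by a hypothetical visitor ID (Meta doesn’t hand advertisers this per-person data). It reports what share of Quality Visitors had already visited within the prior 180 days, matching the exclusion window above.

```python
from datetime import datetime, timedelta


def remarketing_share(quality_visits, prior_visits, lookback_days=180):
    """Fraction of Quality Visitors who had already visited the site
    within `lookback_days` before their qualifying visit.

    quality_visits: dict of hypothetical visitor ID -> time of Quality Visitor event
    prior_visits: dict of visitor ID -> list of earlier visit times
    """
    if not quality_visits:
        return 0.0
    lookback = timedelta(days=lookback_days)
    returning = 0
    for visitor, qv_time in quality_visits.items():
        earlier = prior_visits.get(visitor, [])
        # "Warm" means at least one visit inside the lookback window before the event.
        if any(qv_time - lookback <= t < qv_time for t in earlier):
            returning += 1
    return returning / len(quality_visits)


now = datetime(2024, 6, 1)
quality_visits = {"a": now, "b": now, "c": now, "d": now}
prior_visits = {
    "a": [now - timedelta(days=10)],   # recent visitor: warm
    "b": [now - timedelta(days=400)],  # visited, but outside 180 days
    "c": [],                           # no prior visits
}
print(remarketing_share(quality_visits, prior_visits))  # 0.25
```

A share close to 1.0 would line up with the first scenario (mostly remarketing), while a low share would support the second.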

I don’t think either scenario is necessarily “good” or “bad.” Sure, it would be pretty cool to find out that the algorithm can find people who have never been to my website and who are likely to be quality visitors. It would show that the algorithm truly learns, even from a custom event that very few websites use.

But the main thing is that it would be nice to know one way or the other. If it turns out that it’s basically just going after my audience, that’s evidence that running remarketing campaigns the way we have in the past may no longer be necessary. Barring some exceptions (such as abandoned cart), the remarketing may be driven primarily by the optimization, not the audience.

I’m looking forward to finding out! Stay tuned.

Your Turn

What are your reactions to the latest test results?

Let me know in the comments below!