There are many different ways you can create a split test using Meta’s built-in testing tools. In this post, we’re going to walk through creating an A/B Test within the Experiments section.

This will allow you to test two or more campaign groups, campaigns, or ad sets against one another in a controlled environment to determine which performs best based on a single variable.

Before we get to creating an A/B test in Experiments, let’s cover a little bit of background…

Understanding A/B Testing

An A/B test is more than a comparison of results between two campaigns, ad sets, or ads. You can make that comparison, too, but the results are unscientific because the audiences can overlap.

What makes an A/B test different is that it eliminates overlap to help isolate the value of a particular variable (optimization, targeting, placements, or creative). The targeting pools will be split randomly to make sure that targeted users only see an ad from one of the variations.

Without a true A/B test, there is less confidence in how much a single variable impacted your results.
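To make the idea of a non-overlapping split concrete, here’s a minimal sketch in Python. It is not how Meta implements the split (that isn’t documented here). It only illustrates the principle: each person is assigned to exactly one variation, and the assignment never changes during the test. The function name, the user IDs, and the test ID are all hypothetical.

```python
import hashlib


def assign_variation(user_id: str, test_id: str, variations: list[str]) -> str:
    """Place a user in exactly one variation of a test.

    Hashing the test ID together with the user ID gives an assignment that
    looks random across users but is stable for any one user, so nobody
    can end up seeing ads from two variations of the same test.
    """
    digest = hashlib.sha256(f"{test_id}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variations)
    return variations[bucket]


# Hypothetical example: three ad sets and a handful of made-up user IDs.
variations = ["Ad set A", "Ad set B", "Ad set C"]
for user_id in ["user-1", "user-2", "user-3", "user-4"]:
    print(user_id, "->", assign_variation(user_id, "goal-test", variations))
```

Because every user lands in a single bucket, any difference in results can be attributed to the variation rather than to people who saw more than one version.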

About A/B Tests in Experiments

My preferred method for creating A/B tests with Meta ads is with the Experiments tool. This allows you to select currently running campaigns or ad sets to test against one another.

This is different from the original approach to A/B testing with Meta ads. That method required you to create the variations that would be tested against one another. When the test ended, those ads stopped delivering. You would take what you learned from that test to create a new campaign.

With Experiments, you select campaigns or ad sets that are already running. When you select the schedule for your test, delivery will shift to prevent overlap during those days. Once the test is complete, the delivery of ads will go back to normal.

Create a Test

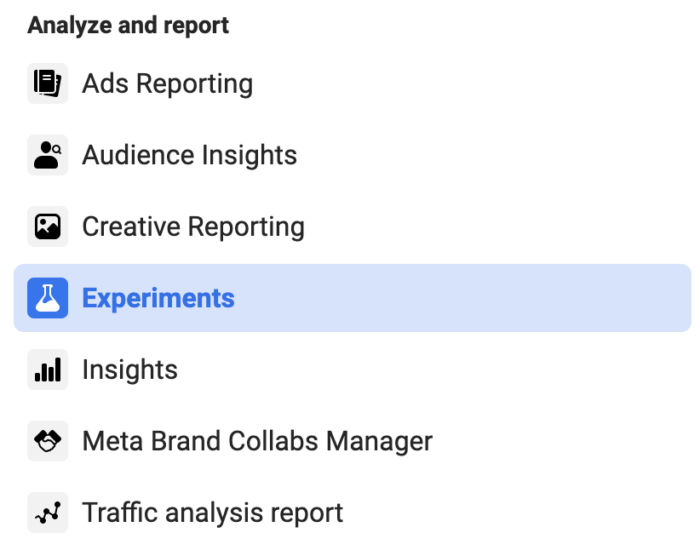

Go to the Experiments section in your Ads Manager Tools menu under “Analyze and Report.”

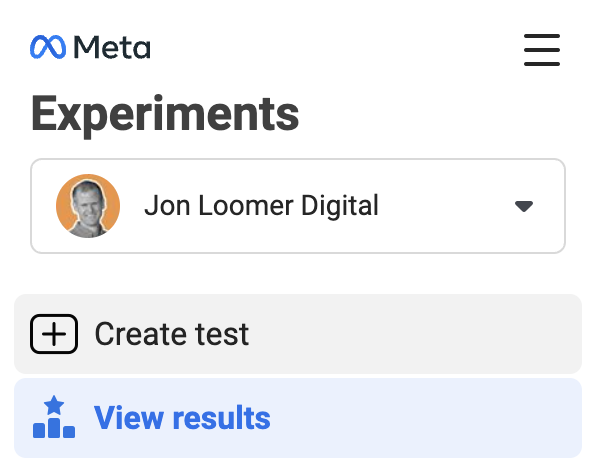

Click “Create Test” in the left sidebar menu (or the green “Create New Test” button at the top right).

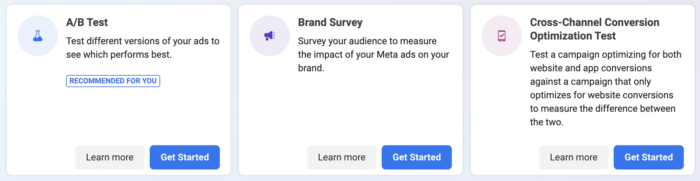

You will see options for the types of tests you can set up. These may include A/B Test, Brand Survey, and Cross-Channel Conversion Optimization Test.

We’re going to run the A/B Test. Click the “Get Started” button.

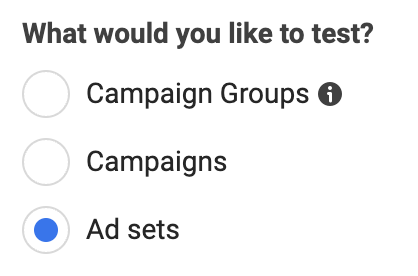

First, we’ll need to determine what we want to test: Campaign Groups, Campaigns, or Ad Sets.

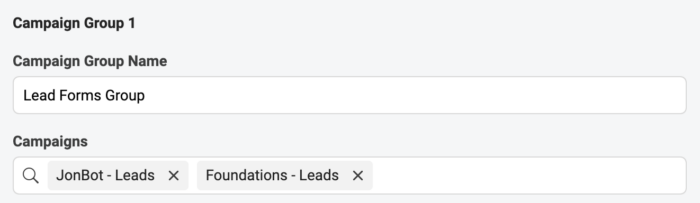

If you test campaign groups, you’ll see the cumulative results for each group of campaigns. You would name each group and select the campaigns that are within it.

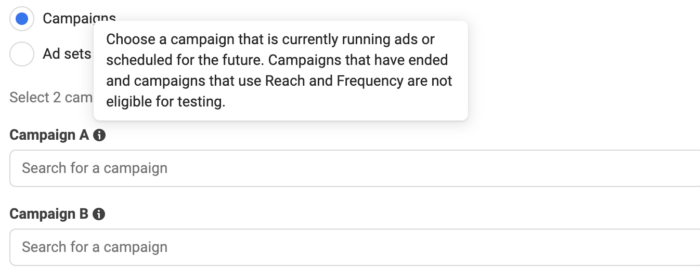

When testing campaigns, remember that all ad sets within each campaign count toward the results.
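Here’s a quick sketch of what “count toward the results” means in practice, assuming the metric is cost per result. The numbers are made up and the function name is hypothetical. The point is that a campaign-level figure comes from the combined totals of its ad sets, not from averaging each ad set’s individual cost per result.

```python
def cost_per_result(rows):
    """Combined cost per result for a list of (spend, results) pairs.

    Each pair stands for one ad set (or one campaign inside a group).
    Returns None when there are no results to divide by.
    """
    total_spend = sum(spend for spend, _ in rows)
    total_results = sum(results for _, results in rows)
    if total_results == 0:
        return None
    return total_spend / total_results


# Made-up campaign with two ad sets: $100 for 10 results, $50 for 2 results.
campaign = [(100.0, 10), (50.0, 2)]
print(cost_per_result(campaign))  # 12.5, i.e. $150 spent for 12 results
```

Averaging the two ad sets separately ($10 and $25) would give $17.50, which overstates the cost because it ignores that most results came from the cheaper ad set.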

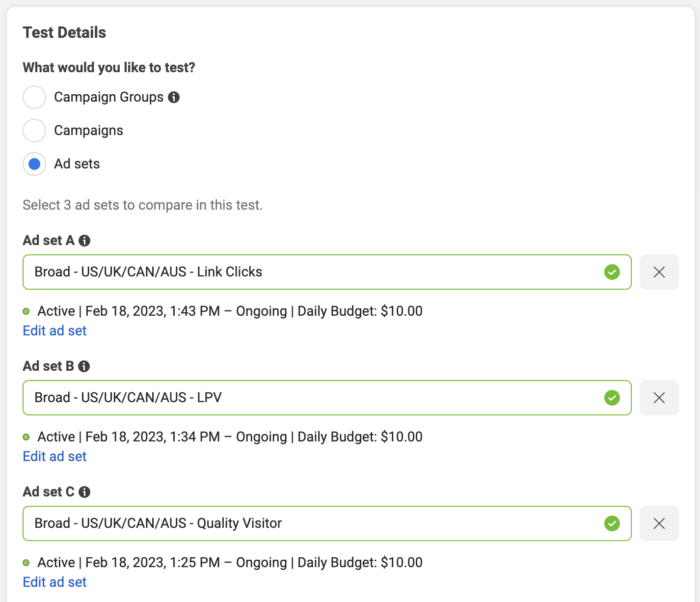

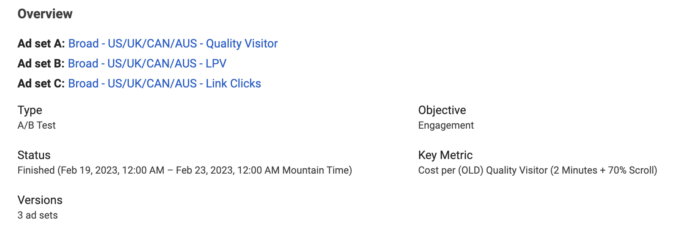

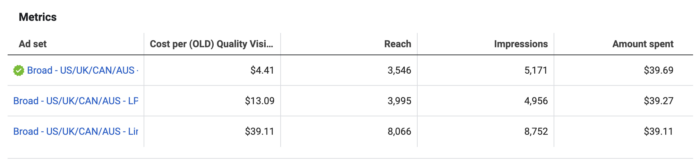

Here’s an example of testing three different ad sets…

Regardless of whether you test campaign groups, campaigns, or ad sets, you will need to select at least two variations and up to five. Meta strongly recommends that the variations that you select are identical except for a single variable. This helps isolate the impact of that variable.

In the example above, I’m testing three ad sets that were each optimized for a different performance goal. Everything else was set up identically: targeting, placements, and creative.

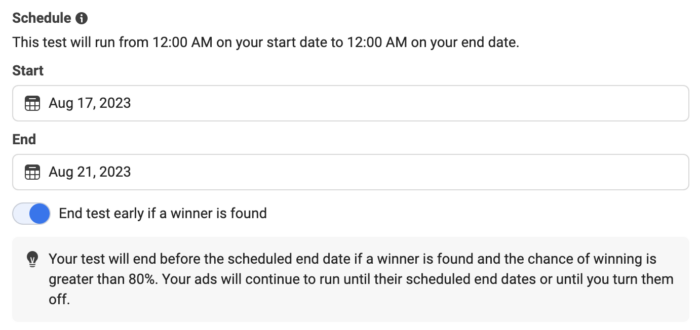

Next, schedule when you want this test to run. Keep in mind that these campaigns or ad sets are already running, so the schedule only controls when the test itself is active.

If you want, you can have the test end early if a winner is found. Otherwise, the test will run for the length of the schedule that you select.

Name your test so that when you are looking at results you’ll easily remember what was tested.

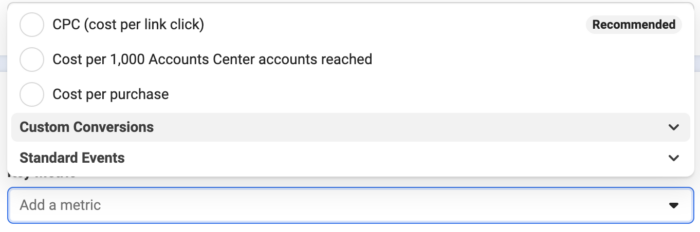

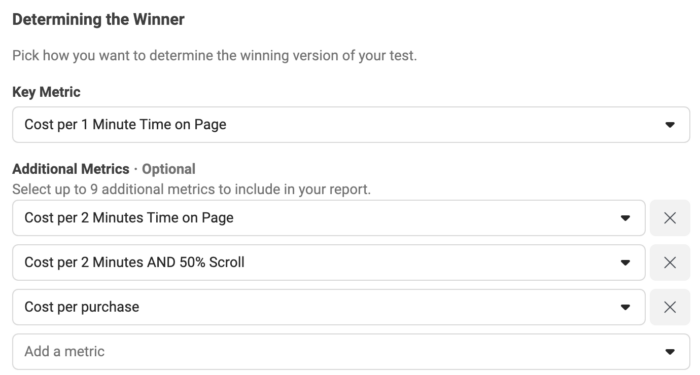

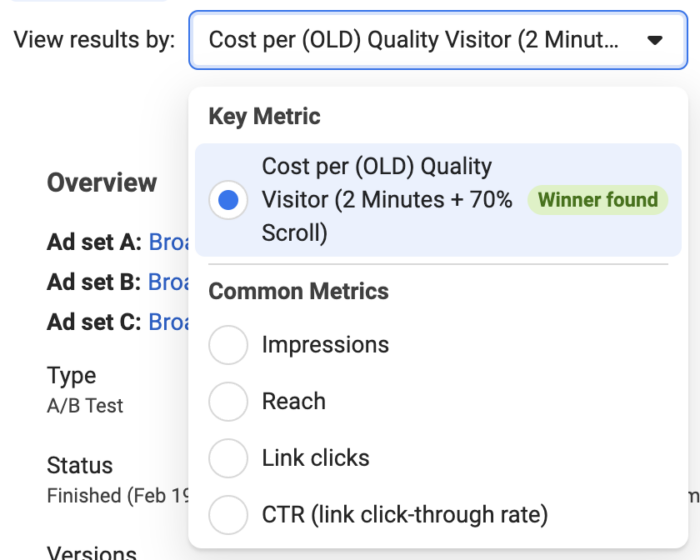

Finally, choose the key metric that will determine your winner. You can choose from some recommended metrics or from your custom conversions and standard events.

The metric you choose doesn’t have to be the performance goal you used. The key metric should be whatever your ultimate measure of success is for these campaigns or ad sets.

You can also include up to nine “Additional Metrics.”

Unlike the key metric, these metrics won’t determine success. Instead, they will be included in your report to help add context.
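If you plan tests in a spreadsheet or script before building them, the setup rules above are easy to check up front. This is a minimal sketch of such a checklist in Python. The `ABTestPlan` class and its field names are hypothetical and have nothing to do with Meta’s own tools or API. Only the limits (two to five variations, up to nine additional metrics) come from the steps described in this post.

```python
from dataclasses import dataclass, field

# Limits taken from the setup steps described in this post.
MIN_VARIATIONS = 2
MAX_VARIATIONS = 5
MAX_ADDITIONAL_METRICS = 9
LEVELS = ("campaign_groups", "campaigns", "ad_sets")


@dataclass
class ABTestPlan:
    name: str
    level: str
    variations: list[str]
    key_metric: str
    additional_metrics: list[str] = field(default_factory=list)

    def problems(self) -> list[str]:
        """Return a list of setup problems (empty when the plan looks fine)."""
        found = []
        if not self.name.strip():
            found.append("Give the test a descriptive name.")
        if self.level not in LEVELS:
            found.append(f"Level must be one of {LEVELS}.")
        if not MIN_VARIATIONS <= len(self.variations) <= MAX_VARIATIONS:
            found.append("Select between 2 and 5 variations.")
        if len(set(self.variations)) != len(self.variations):
            found.append("Each variation should be listed once.")
        if not self.key_metric:
            found.append("Choose a key metric.")
        if len(self.additional_metrics) > MAX_ADDITIONAL_METRICS:
            found.append("Include at most 9 additional metrics.")
        return found


plan = ABTestPlan(
    name="Performance goal test",
    level="ad_sets",
    variations=["Ad set A", "Ad set B", "Ad set C"],
    key_metric="Purchases",
    additional_metrics=["Link clicks", "Cost per purchase"],
)
print(plan.problems())  # prints [] because this plan passes every check
```

The check can’t tell whether your variations differ by only one variable. That part is still up to you.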

View Results

Click “View Results” in the left-hand menu within Experiments.

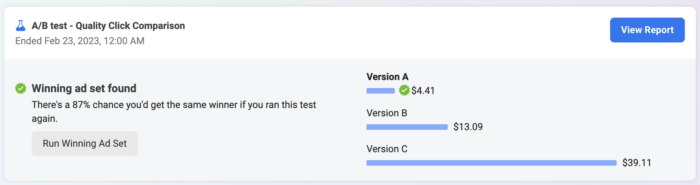

You will see highlights of the experiments that you have run or are running. This provides a snapshot of how each participant performed against your key metric.

Click “View Report” to see additional details.

You can view results by your key metric, additional metrics (if you provided any), or common metrics.

There is an overview of the participants in the test…

A table to compare metrics (this will include additional metrics if you provided them when setting up the test)…

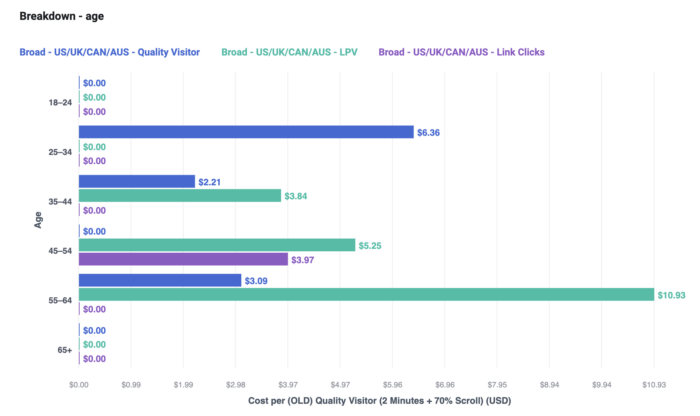

And a breakdown by age…

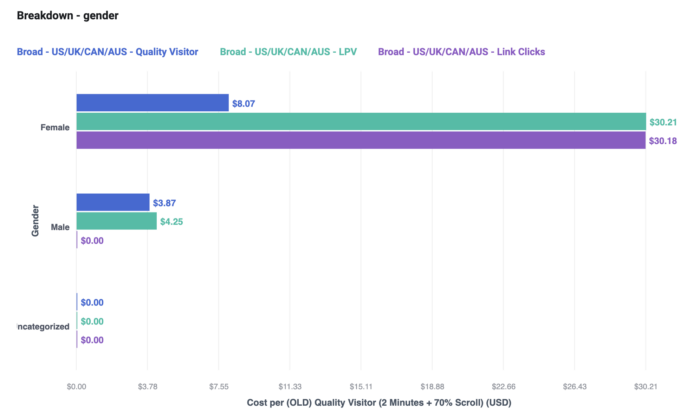

And gender…

A Note on Testing Results

Keep in mind that you have a single goal when running an A/B test: You want to figure out which variation leads to the results that you want based on a single variable.

Don’t be distracted from this goal. I’ve found that my results tend to be worse during A/B tests. This makes some sense since targeting will be restricted to prevent overlap.

What you do with the information learned from your A/B test is up to you. It’s possible that Meta will have low confidence in the results (you’ll see the percentage chance that you’d get the same results if you ran the test again). In that case, you may not want to act on it.

But if confidence is high, you may want to turn off the variation(s) that lost and increase the budget for the winning variation.
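If you want a feel for what a confidence percentage like this represents, here’s a rough sketch you can run on your own numbers. To be clear, this is not Meta’s calculation, which isn’t described in this post. It’s a common simulation approach that assumes the key metric is a simple conversion rate (conversions divided by people reached) and estimates how often each variation would come out on top. The function name and the sample numbers are made up.

```python
import random


def chance_to_win(results, draws=5000, seed=0):
    """Estimate how often each variation has the best conversion rate.

    results maps a variation name to (conversions, people_reached).
    Each draw samples a plausible conversion rate for every variation
    from a Beta distribution and records which variation is highest.
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        sampled = {
            name: rng.betavariate(conversions + 1, reached - conversions + 1)
            for name, (conversions, reached) in results.items()
        }
        best = max(sampled, key=sampled.get)
        wins[best] += 1
    return {name: count / draws for name, count in wins.items()}


# Made-up results for three ad sets that each reached 4,000 people.
observed = {
    "Ad set A": (120, 4000),
    "Ad set B": (90, 4000),
    "Ad set C": (60, 4000),
}
for name, share in chance_to_win(observed).items():
    print(f"{name}: {share:.1%}")
```

When the top share is close to 100%, the gap between variations is large relative to the noise. When the shares are spread out, the “winner” could easily change on a rerun, which is the situation where you may not want to act.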

Watch Video

I recorded a video about this, too…

Your Turn

Have you run A/B tests in Experiments? What have you learned?

Let me know in the comments below!